Transforming Product Improvement and Warranty Cost Reduction with AI

A Practical Playbook for Manufacturers to Adopt AI Without "Perfect Data"

January 4, 2026 | White Paper

For manufacturers seeking to improve financial performance and customer satisfaction, one of the fastest routes to impact is often a product-improvement agenda anchored in warranty cost reduction. Warranty expense weighs directly on margins, and quality failures can escalate into recalls, reputational damage, and a higher total cost of quality.

Yet many engineering and quality organizations delay AI adoption because they believe their data is not "ready." That concern is understandable: traditional manufacturing AI and ML applications—from logistics planning to advanced driver assistance—often depend on structured datasets, extensive validation, and tightly controlled error tolerance.

However, modern AI—particularly workflow acceleration AI—can deliver measurable value without requiring enterprise-wide "perfect data" as a prerequisite. Rather than attempting to unify and cleanse every system upfront, manufacturers can start by targeting the largest sources of delay and cost: fragmented evidence, manual investigation, slow handoffs, and inconsistent supplier recovery execution. Done correctly, this approach strengthens governance and traceability, reduces cycle time, and improves the ability to prevent recurrence—while keeping engineering accountability firmly in place.

This playbook outlines:

- Why warranty and product-improvement value is becoming harder to capture with traditional approaches alone

- Where investigation and closure typically leak cycle time and cost

- The governance model required to deploy AI safely

- Five AI levers that accelerate product improvement and reduce warranty cost

- A practical 90-day pilot approach that proves impact without increasing risk

The challenges of capturing and sustaining value in product improvement

Pressure on quality and warranty performance is intensifying as products become more complex. Several shifts are raising both the frequency and the difficulty of problems:

- Complex system integration. Products increasingly combine mechanical systems with electronics, software, and connectivity—requiring broader expertise to diagnose and resolve failures.

- Configuration and usage diversity. Tailored options, duty cycles, and environments cause products to fail in more varied ways.

- Intermittent failures. Electronics and software introduce unpredictable patterns that are difficult to reproduce and analyze.

- Process speed constraints. Traditional verification and validation processes are often too slow for today's pace of updates and feature releases.

- A data paradox. Organizations have more warranty claims, field records, and operational data than ever before—yet few teams have the tools to access, connect, and leverage unstructured evidence in day-to-day engineering work.

Given these dynamics, many organizations can "improve project by project," but struggle to achieve step changes in cycle time or warranty performance.

The readiness myth: why "perfect data" is the wrong starting point

A common view is that "AI is only as good as the data you feed it," and therefore AI must wait until data is fully integrated and cleansed. In engineering environments, that concern is often reinforced by experience with safety-critical AI applications.

But this framing conflates two different categories of AI:

1. Control- and safety-critical AI (embedded perception, closed-loop optimization)

Requires extensive validation, strict performance thresholds, and narrowly bounded behavior.

2. Workflow acceleration AI (evidence retrieval, summarization, clustering, traceable drafting)

Delivers value by reducing the engineering "search + handoff + documentation" tax—under human oversight and auditability.

This playbook focuses on category #2. The goal is not to automate engineering judgment. The goal is to accelerate product improvement by making evidence easier to find, easier to validate, and easier to convert into action—without increasing risk.

Where warranty cost and product-improvement velocity leak

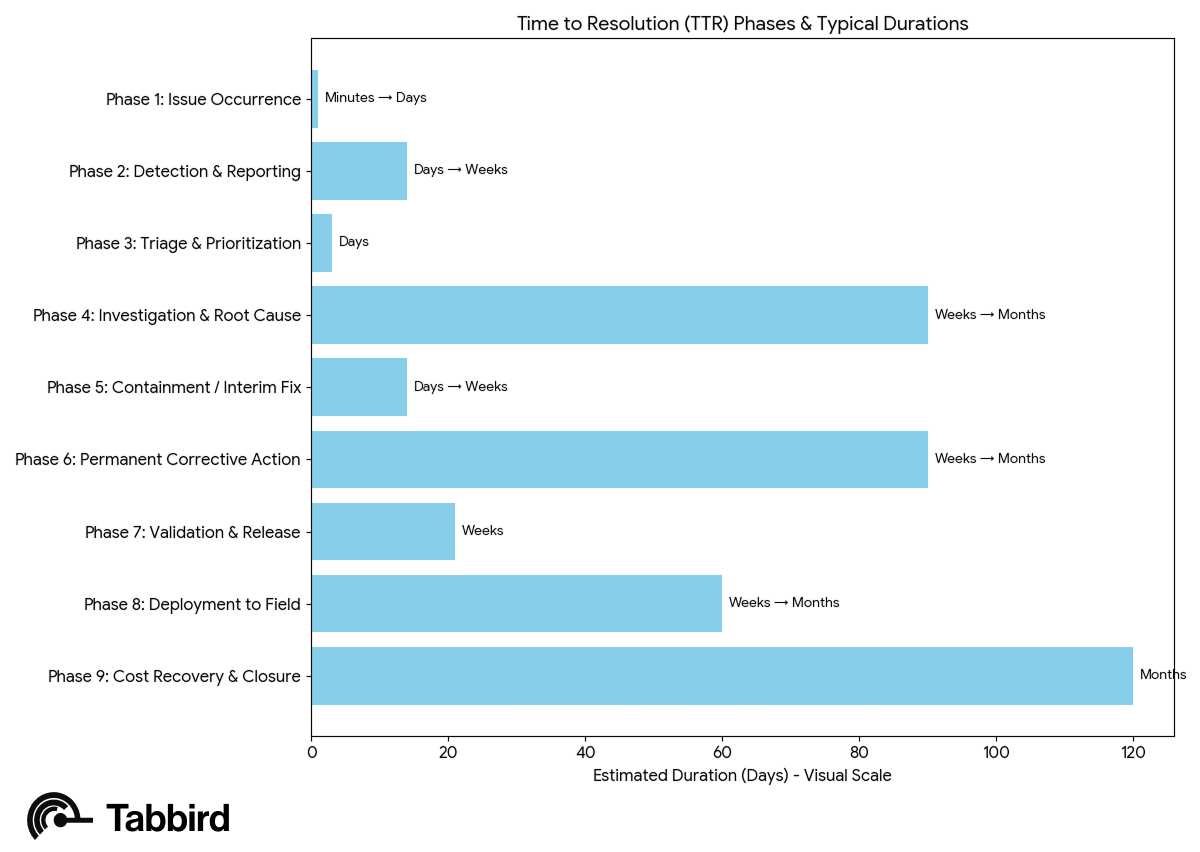

Manufacturers often track time-to-resolution (TTR) or similar measures (time to identify, time to fix). But the most important insight is where cycle time accumulates.

A typical field issue moves through nine phases:

1. Field event occurs

2. Detection and reporting

3. Triage and prioritization

4. Investigation and root cause analysis (RCA)

5. Containment / interim fix

6. Permanent corrective action (design / supplier / manufacturing / software)

7. Validation and release

8. Deployment to field

9. Cost recovery and closure

In many organizations, the largest leakage concentrates in:

- Phase 4: Investigation and RCA (manual evidence gathering and cross-functional alignment)

- Phase 9: Recovery and closure (missed recovery opportunities due to slow evidence packaging and dispute cycles)

Exhibit 1: TTR phases, typical cycle-time distribution, and primary leakage points (Phase 4 and Phase 9)
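
As a minimal sketch of how this phase-level view could be computed, the Python below takes the dates on which one issue entered each phase and reports the days spent per phase and the phase with the largest share. The phase names follow the list above. The `phase_durations` helper, the assumption that each phase ends when the next begins, and the sample dates are all hypothetical illustrations, not data from a real program.

```python
from datetime import date

# Phase names follow the nine-phase list in this section.
PHASES = [
    "Field event occurs",
    "Detection and reporting",
    "Triage and prioritization",
    "Investigation and RCA",
    "Containment / interim fix",
    "Permanent corrective action",
    "Validation and release",
    "Deployment to field",
    "Cost recovery and closure",
]


def phase_durations(entered, closed):
    """Return {phase: days} from the date each phase was entered.

    Assumes each phase ends when the next one begins, which is a
    simplification: in practice phases often overlap.
    """
    if len(entered) != len(PHASES):
        raise ValueError("expected one entry date per phase")
    boundaries = list(entered) + [closed]
    return {
        phase: (boundaries[i + 1] - boundaries[i]).days
        for i, phase in enumerate(PHASES)
    }


# Hypothetical issue, invented purely for illustration.
entered = [
    date(2025, 1, 6), date(2025, 1, 8), date(2025, 1, 15),
    date(2025, 1, 20), date(2025, 3, 3), date(2025, 3, 10),
    date(2025, 4, 7), date(2025, 4, 21), date(2025, 5, 5),
]
durations = phase_durations(entered, closed=date(2025, 6, 9))
total_days = sum(durations.values())
largest_phase = max(durations, key=durations.get)

for number, (phase, days) in enumerate(durations.items(), start=1):
    print(f"Phase {number}: {phase:<28} {days:>3} d ({days / total_days:.0%})")
print("Largest share:", largest_phase)
```

Running the same calculation over a population of closed issues, rather than a single one, is what would reveal whether Phase 4 and Phase 9 dominate in a given organization.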

Why traditional approaches provide the foundation—but are no longer sufficient

Traditional quality approaches (for example, structured root-cause analysis, action tracking, and performance reviews) remain essential. But their effectiveness is increasingly constrained by evidence and workflow realities.

Common failure points include:

- Dashboards without evidence. BI tools support stable reporting, but not situational investigation across text, documents, and attachments.

- Manual RCA at scale. Structured problem solving works, but compiling evidence and aligning stakeholders consumes disproportionate time.

- Knowledge that cannot be reused. Past cases often capture outcomes but not the decision context needed for safe reuse.

- Supplier accountability limited by friction. Recovery execution depends less on policy intent and more on speed, traceability, and evidence quality.

As a result, teams spend too much time preparing information and too little time designing corrective actions and prevention strategies.

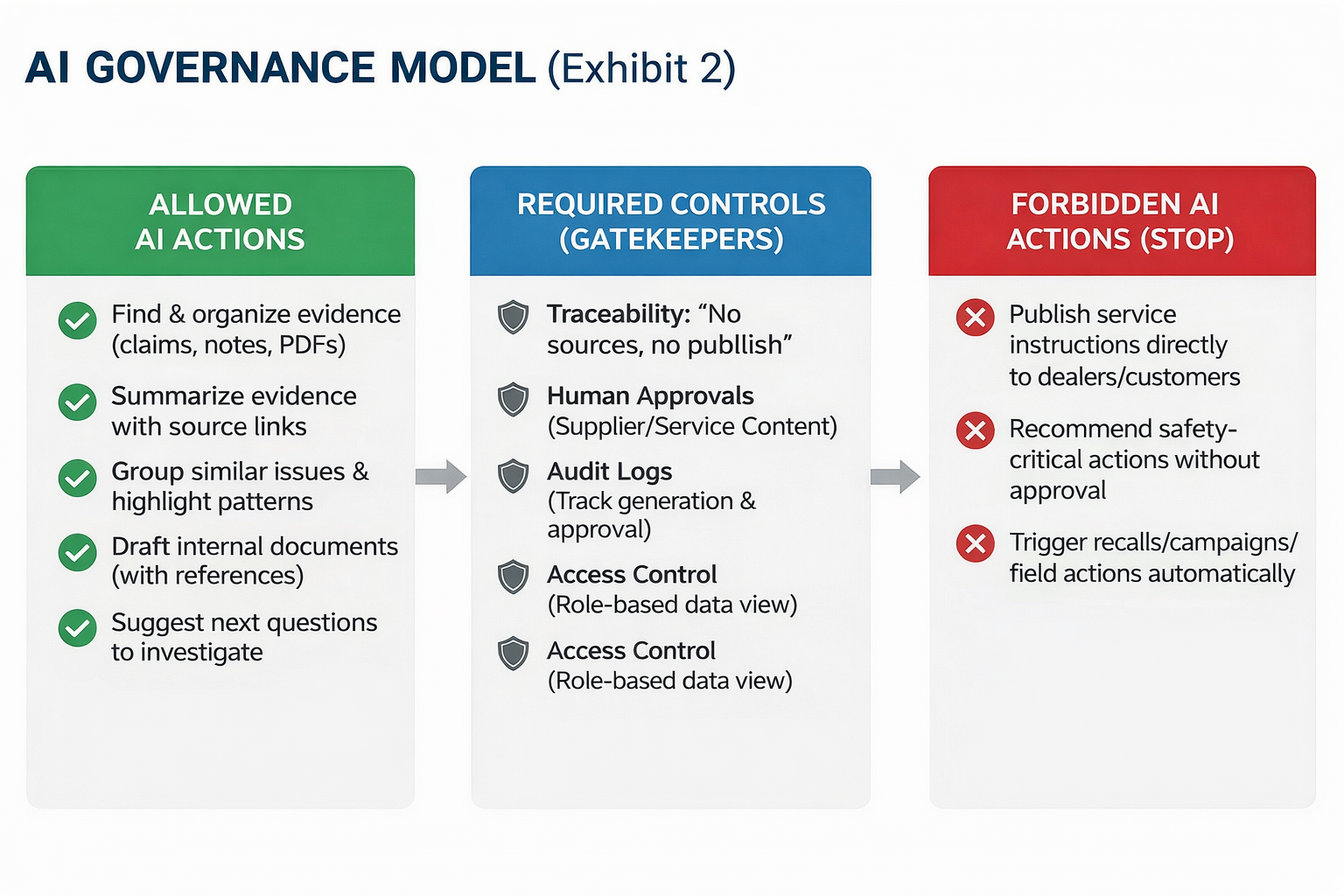

A governance-first model for AI in product improvement

For engineering leaders, adoption succeeds only when AI is deployed under governance that protects safety, compliance, and accountability.

A practical governance model includes:

- Explicit "allowed vs. not allowed" use cases. AI may retrieve evidence, summarize, cluster, draft internal documents with citations, and propose next questions. AI may not publish service instructions externally, recommend safety-critical actions without approval, or trigger field actions autonomously.

- Traceability by default. "No citations, no publish."

- Human approval gates. Required for supplier-facing content, service bulletins, and safety-adjacent decisions.

- Auditability. Logs for prompts, outputs, approvals, and data access.

- Role-based access control (RBAC) and segmentation. Program/platform separation, supplier segmentation, and controlled exposure.

Exhibit 2: Governance model—allowed/not allowed use cases, approval workflow, and audit trail
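
To make the rules above concrete, here is a minimal sketch of a publish gate that enforces "no citations, no publish," requires a named human approver for gated audiences, and logs every decision. The `Draft` structure, the audience labels, and the `can_publish` function are hypothetical names chosen for illustration; a real deployment would also need RBAC and durable log storage, which this sketch omits.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical audience labels that require a human approval gate.
GATED_AUDIENCES = {"supplier", "service_bulletin", "safety_adjacent"}


@dataclass
class Draft:
    text: str
    audience: str  # e.g. "internal" or "supplier"
    citations: List[str] = field(default_factory=list)
    approved_by: Optional[str] = None


# In-memory stand-in for an audit trail.
audit_log = []


def can_publish(draft):
    """Apply the citation and approval checks, and log the decision."""
    if not draft.citations:
        allowed, reason = False, "no citations"
    elif draft.audience in GATED_AUDIENCES and draft.approved_by is None:
        allowed, reason = False, "human approval required"
    else:
        allowed, reason = True, "ok"
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "audience": draft.audience,
        "allowed": allowed,
        "reason": reason,
        "approved_by": draft.approved_by,
    })
    return allowed


internal = Draft("Summary of related claims", "internal", citations=["claim-001"])
uncited = Draft("Summary with no sources", "internal")
supplier = Draft("Evidence packet", "supplier", citations=["claim-001"])

print(can_publish(internal))   # True: cited, internal audience
print(can_publish(uncited))    # False: no citations
print(can_publish(supplier))   # False: gated audience, not yet approved
supplier.approved_by = "quality.lead"
print(can_publish(supplier))   # True: cited and approved
```

Logging refusals as well as approvals is a deliberate choice in this sketch: the audit trail then shows that the gates were exercised, not only that content was released.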

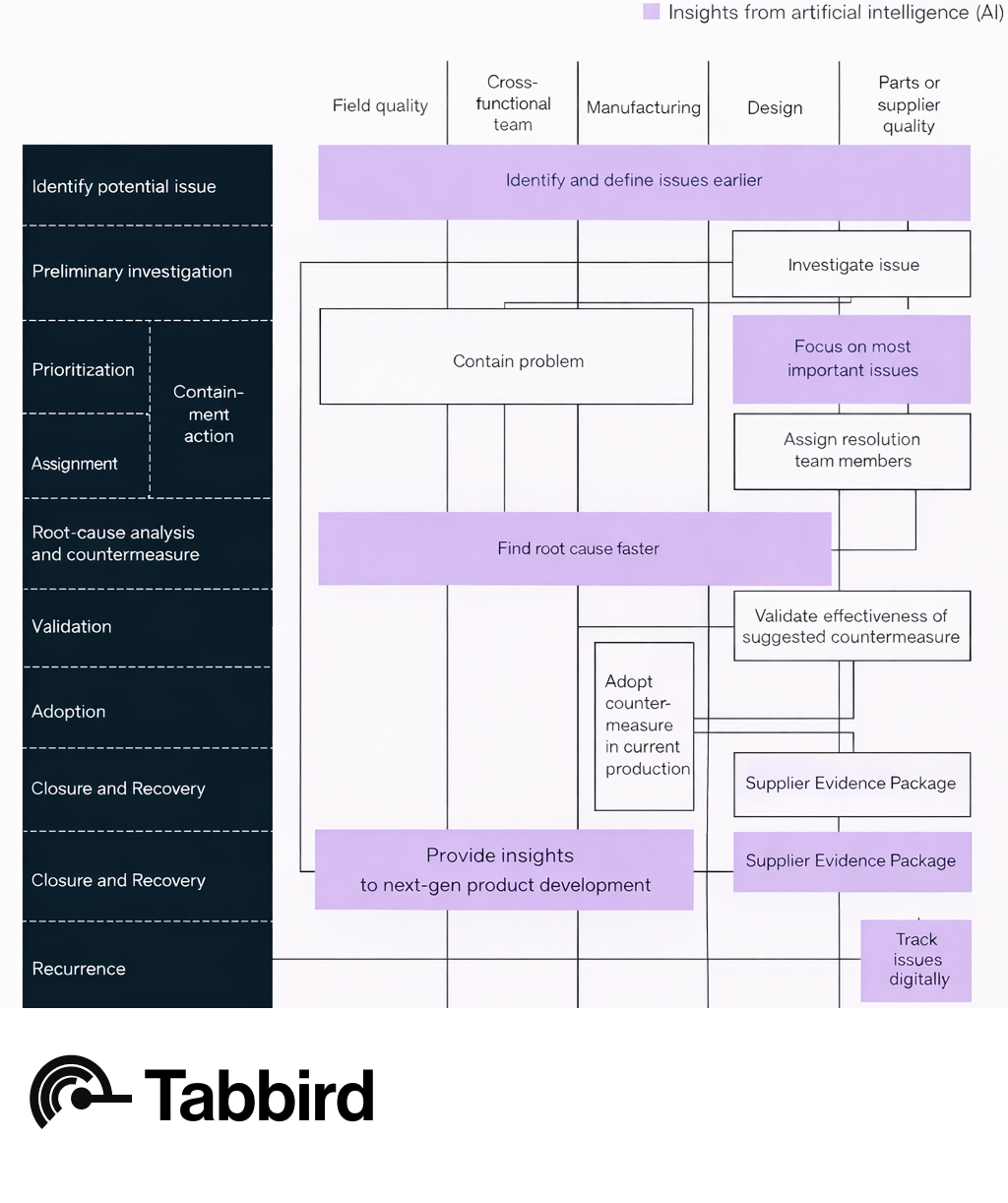

Five AI levers to accelerate product improvement and reduce warranty cost

When integrated into daily processes, AI can deliver tangible benefits through five levers:

Lever 1: Evidence search across unstructured artifacts (Phases 2–4)

What it does: Retrieves relevant claims, technician notes, PDFs, specs, test reports, and prior cases based on engineering context.

Why it matters: Reduces time spent searching and improves consistency of evidence used in RCA.
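
The sketch below illustrates the retrieval idea with a small TF-IDF index built from the Python standard library. This is a keyword-weighting stand-in, not the semantic search a production system would likely use, and the document IDs, texts, and function names are hypothetical. Each result carries its document ID so it can be cited.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """docs: {doc_id: text}. Returns (tf-idf vectors per doc, idf table)."""
    counts = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
    n_docs = len(docs)
    doc_freq = Counter(term for c in counts.values() for term in c)
    # Smoothed inverse document frequency.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}
    vectors = {
        doc_id: {t: tf * idf[t] for t, tf in c.items()}
        for doc_id, c in counts.items()
    }
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query, vectors, idf, top_k=3):
    """Return up to top_k (doc_id, score) pairs with a non-zero score."""
    q_counts = Counter(tokenize(query))
    q_vec = {t: tf * idf[t] for t, tf in q_counts.items() if t in idf}
    scored = sorted(
        ((cosine(q_vec, vec), doc_id) for doc_id, vec in vectors.items()),
        reverse=True,
    )
    return [(doc_id, round(score, 3)) for score, doc_id in scored[:top_k] if score > 0]


# Hypothetical artifacts, invented for illustration.
docs = {
    "claim-101": "Hydraulic pump leaking at seal after cold start",
    "tech-note-7": "Customer reports intermittent display reset, software version unknown",
    "test-report-3": "Seal material hardens in cold weather, leak observed on pump housing",
}
vectors, idf = build_index(docs)
results = search("pump seal leak cold", vectors, idf)
for doc_id, score in results:
    print(doc_id, score)
```

Note that exact-token matching treats "leak" and "leaking" as different words; handling such variation is one reason real deployments tend to move to stemming or embedding-based retrieval.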

Lever 2: Failure-mode clustering and micro-trend detection (Phases 3–4)

What it does: Groups similar claims and narratives to detect patterns earlier and reduce duplicate investigations.

Why it matters: Compresses the early investigation cycle and improves prioritization.
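
As a rough illustration of grouping similar narratives, the sketch below clusters claim texts by word overlap (Jaccard similarity) in a single greedy pass. The stopword list, the 0.3 threshold, the claim texts, and the function names are assumptions made for this example; production clustering would more plausibly use embeddings and a tuned algorithm.

```python
import re

# Small illustrative stopword list; an assumption, not a standard set.
STOPWORDS = {"the", "a", "an", "at", "on", "in", "of", "and", "after", "with", "is", "to"}


def token_set(text):
    """Lowercased alphanumeric tokens with stopwords removed."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def jaccard(a, b):
    """Share of tokens the two sets have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_claims(claims, threshold=0.3):
    """Greedy single pass: each claim joins the first cluster whose seed
    claim is similar enough, otherwise it starts a new cluster."""
    clusters = []  # each entry: {"seed": token set, "ids": [claim ids]}
    for claim_id, text in claims.items():
        tokens = token_set(text)
        for cluster in clusters:
            if jaccard(tokens, cluster["seed"]) >= threshold:
                cluster["ids"].append(claim_id)
                break
        else:
            clusters.append({"seed": tokens, "ids": [claim_id]})
    return [cluster["ids"] for cluster in clusters]


# Hypothetical claim narratives, invented for illustration.
claims = {
    "C1": "Pump seal leak at cold start",
    "C2": "Display resets while driving",
    "C3": "Seal leak on pump after cold night",
    "C4": "Display resets and goes blank",
}
groups = cluster_claims(claims)
print(groups)  # [['C1', 'C3'], ['C2', 'C4']]
```

Because the pass is greedy, results depend on input order and on the threshold, so any grouping produced this way is a prompt for engineering review rather than a confirmed failure mode.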

Lever 3: RCA workflow acceleration with traceable drafting (Phases 4–6)

What it does: Supports structured RCA methods (8D/5-Why) by drafting sections, linking evidence, and capturing rationale during the work.

Why it matters: Improves documentation quality while lowering administrative burden—strengthening closed-loop learning.

Lever 4: Digital issue tracking that reduces handoffs and rework (Phases 4–8)

What it does: Creates a live, evidence-linked issue board to manage actions, measure fix effectiveness, and reduce "status reconstruction."

Why it matters: Accelerates execution and reduces coordination cost.

Lever 5: Supplier recovery acceleration through evidence packaging (Phase 9)

What it does: Assembles supplier-ready evidence packets with traceability, attribution inputs, and auditable communication trails.

Why it matters: Reduces dispute friction and cycle time—improving recovery effectiveness.

Closed-loop learning: improving prevention, not just resolution

Reducing warranty cost requires preventing recurrence, not only fixing symptoms.

AI strengthens prevention when it:

- Captures evidence and decision context throughout investigation

- Links validated failure modes to design and process updates

- Supports DFMEA/PFMEA updates by turning "lessons learned" into usable, traceable guidance

Over time, this improves product robustness and reduces repeat issues across programs and releases.

How to get started: a 90-day, metrics-first pilot

Manufacturers are more likely to sustain value when AI is integrated into end-to-end product improvement processes and adopted by engineers.

A practical pilot approach includes three steps:

Step 1: Establish baseline and select a pilot domain (Weeks 1–2)

Select an area with:

- Recurring issues, high cost, or long investigation cycles

- Heavy reliance on unstructured evidence

- Meaningful supplier involvement

- Manageable risk under approval gates

Baseline metrics:

- Phase 4 cycle time (start-to-hypothesis; start-to-validated root cause)

- Time spent searching and compiling evidence

- Number of handoffs and rework loops

- Supplier submission cycle time (Phase 9 leading indicator)

Step 2: Deploy evidence search and clustering under governance (Weeks 3–6)

- Start with 2–3 sources (claims, repair order (RO) notes, top document repository).

- Implement RBAC, citations, and audit logs from day one.

Step 3: Activate RCA workflow and supplier evidence packaging (Weeks 7–12)

- Introduce traceable drafting and approval gates.

- Pilot supplier evidence packaging and track cycle-time improvements.

Exit criteria:

- Measurable reduction in Phase 4 cycle time (for example, 20–30% on the pilot population)

- Reduction in time spent searching/rebuilding context

- Clean governance record (approval gates followed; logs complete)

- Sustained adoption (weekly active users; repeated usage on live cases)
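
The first exit criterion reduces to simple arithmetic, sketched below. Comparing medians rather than means is an assumption made here to limit the effect of outlier cases, the 20% threshold is the lower bound of the example range above, and the cycle-time figures are hypothetical.

```python
from statistics import median


def percent_reduction(baseline, pilot):
    """Relative reduction of the pilot median versus the baseline median."""
    base = median(baseline)
    if base <= 0:
        raise ValueError("baseline median must be positive")
    return (base - median(pilot)) / base


# Hypothetical Phase 4 cycle times in days, invented for illustration.
baseline_days = [40, 55, 38, 62, 45]  # median 45
pilot_days = [30, 36, 28, 41, 33]     # median 33

reduction = percent_reduction(baseline_days, pilot_days)
meets_target = reduction >= 0.20

print(f"Phase 4 median reduction: {reduction:.1%}")  # 26.7%
print("Meets 20% target:", meets_target)             # True
```

With only a handful of pilot cases, a result like this is indicative rather than conclusive, which is why the remaining exit criteria (governance record and sustained adoption) matter alongside it.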

Exhibit 3: Pilot timeline, baseline metrics, targets, governance checkpoints

Where Tabbird fits (implementation example)

Tabbird is designed to operationalize the governed, evidence-first workflow described in this playbook by providing:

- Live issue tracking and recommendations linked to source evidence

- Clustering of unstructured claims and technician notes into failure modes

- Semantic evidence search across narrative and document artifacts

- RCA workflow support with traceable drafting and approval gates

- Supplier recovery evidence packaging with auditable collaboration trails

- Closed-loop learning to strengthen prevention and reduce recurrence

The objective is to accelerate product improvement and reduce warranty cost without increasing safety, compliance, or warranty risk.

Case study: unlocking engineering capacity at a global OEM

An industrial manufacturer faced a critical bottleneck. As new IoT features expanded the volume of available information, highly skilled engineers were spending more than two-thirds of their time on repetitive data exploration rather than complex problem solving. Despite heavy investment in connected-fleet infrastructure, large portions of IoT data remained underutilized because manual correlation was too time-consuming.

Intervention. The organization deployed an AI-native resolution workflow to automate the early triage and investigation steps. Engineers used AI data agents to cluster failure modes and validate hypotheses against fleet sensor data.

Impact. Within a three-month pilot, the organization observed measurable shifts:

- Faster time-to-insight: Time to identify plausible root-cause hypotheses from field reports dropped from weeks to minutes, enabling earlier containment and RCA initiation.

- Higher engineering throughput: With repetitive data tasks reduced, the number of top-priority issues engineers could handle increased.

- Deeper data utilization: Usage of IoT datasets rose as engineers could more quickly cross-reference field failures with connected-machine data to validate hypotheses.

- Stronger supplier recovery: Similarity search helped recovery teams identify repeat supplier failures across product lines and configurations, strengthening evidence packages for collaborative RCA and cost recovery.

Conclusion

As products become more complex, traditional quality approaches remain necessary but increasingly constrained by fragmented evidence and process friction. Manufacturers can unlock a step change in product-improvement velocity—and reduce warranty costs—by deploying AI as a governed workflow system that strengthens evidence traceability, accelerates investigation, and improves recovery execution.

The most successful organizations begin with a metrics-first pilot, integrate capabilities into daily workflows, and build internal capabilities that evolve with each product release.
